As consultants at Angular Architects, we have the opportunity to help our clients write better software with architecture that can scale beyond a one-man army.

However, not every program starts as a greenfield project. Even when it does, the architecture we choose could turn out to be problematic or fail to scale.

When customers request an architecture review, they typically fall into one of these categories:

- Validation Seekers: They believe their architecture is solid but want to identify minor areas for improvement.

- Rescue Pilots: The request usually comes from management after feature delivery has slowed down or stopped completely. For example, when a lead developer suggests a rewrite to improve the situation, management needs an unbiased second opinion.

- Growth Challengers: These customers face scaling challenges ranging from backend architecture (handling hundreds of thousands of concurrent requests) to frontend performance (such as sluggish tables or generally poor Lighthouse performance scores).

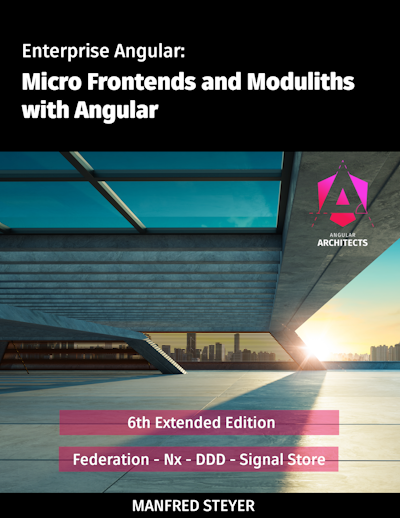

When we receive an inquiry, we first schedule a meeting with management to plan our investigation of the codebase. At this stage, it's important to understand why the architecture review is being requested and, more precisely, what the current pain points are.

Let me pause here: an architecture review is a delicate topic. We don't judge people or their decisions; instead, we take a snapshot of the current state. This snapshot needs a clear perspective, or more precisely, a defined goal.

If the review precedes an acquisition, we must verify that the software can deliver what was promised during the sale. In other cases, we might encounter a company that has stopped delivering features due to an imbalance between testing and development, with tests created everywhere without purpose. Here, we focus on the process, examining whether the test cases make sense and how they're implemented.

Every review is unique. Sometimes we investigate performance issues only to uncover poor architecture or misguided premature optimization. Since we can't cover everything, I'll create an artificial review example that combines various experiences from past projects.

Let's consider an example where a company called E-Corp requested an architecture review of their cloud application. The frontend uses Angular, while the backend is built with C# and structured as a distributed monolith. E-Corp rebuilt their solution from scratch three years ago because their previous system was over a decade old and had become unmaintainable—most of the original developers had left the company, leading to what's known as "brain drain."

After our initial management interview, we established our primary goal: to improve the architecture and processes between frontend and backend to accelerate feature delivery. Additionally, we planned to evaluate the existing tests to assess their implementation and effectiveness.

I requested access to the source code to create an initial analysis (for simplicity, we'll focus here only on the frontend part, though in our code reviews we take a holistic approach by examining the entire software product).

The first step is to bootstrap the software locally. Typically, we expect to run:

    npm install && npm start

If that doesn't work, I examine the README.md and package.json files to find documentation for setting up the project.

The E-Corp app launched and opened a browser window displaying a login screen. Through the network tab, I discovered they were running a central development server.

Here are my initial suggestions:

To improve the frontend development process, we could either use docker-compose (which would include the backend and a seeded database—feasible only for smaller apps) or implement a mock backend.

I prefer the mock backend approach, where we have several tools at our disposal. We could use Mockoon, Postman, or Hoppscotch to generate fake responses.

While these tools work well, the simplest approach is creating a Node Express application with the openapi-backend package. You can feed your YAML definition into it and register functions for each endpoint, like this:

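    import express from 'express';
    import OpenAPIBackend from 'openapi-backend';

    const api = new OpenAPIBackend({ definition: 'public/openapi.yml' });

    // Handler names must match the operationId values in the OpenAPI definition
    api.register({
      getItems: (c, req, res) => res.status(200).json([{ id: 1, name: 'CodeRabbit' }]),
      notFound: (c, req, res) => res.status(404).json({ error: 'not found' }),
    });
    api.init();

    const app = express();
    // Route every incoming request through openapi-backend
    app.use((req, res) => api.handleRequest(req, req, res));
    app.listen(3000); // port chosen for illustration

The next step is to run automated tools to gather key metrics from the source code. We use tools like SonarQube and JetBrains Qodana for initial measurements and insights. While running these, I also employ additional scanners like detect-secrets, Snyk, Semgrep, and Horusec. This information-gathering phase helps build an overall picture.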

I pay particular attention to metrics such as:

- Cyclomatic Complexity (McCabe)

- Afferent Coupling / Efferent Coupling

- Lines of Code

- Code Coverage

- …

However, these metrics need proper context—we can't simply say:

"We found a Cyclomatic Complexity of 93, but best practices suggest 10"

Instead, we need to understand the specific requirements and constraints of the project to make meaningful recommendations for improvement.

Sometimes code is complex because people need to implement novel solutions that may not follow best practices, simply because no one has solved the problem that way before. Coming from a C++ background, I understand that code can look messy when optimising for performance—whether we're working around limitations in opcode generation or reducing virtual function call overhead to save milliseconds of runtime.
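To make the coupling metrics from the list above more concrete, here is a minimal sketch that derives afferent coupling (Ca), efferent coupling (Ce), and the commonly used instability ratio I = Ce / (Ca + Ce) from an import graph. The graph, the module names, and the function name `couplingMetrics` are assumptions made up for illustration; real tools compute these values from the actual source code.

```typescript
// Hypothetical import graph: module name -> modules it imports.
type ImportGraph = Record<string, string[]>;

interface CouplingMetrics {
  afferent: number;    // Ca: number of modules that depend on this one
  efferent: number;    // Ce: number of modules this one depends on
  instability: number; // I = Ce / (Ca + Ce); 0 = stable, 1 = unstable
}

function couplingMetrics(graph: ImportGraph): Record<string, CouplingMetrics> {
  const result: Record<string, CouplingMetrics> = {};
  const ensure = (name: string): CouplingMetrics => {
    if (!result[name]) {
      result[name] = { afferent: 0, efferent: 0, instability: 0 };
    }
    return result[name];
  };

  for (const [source, targets] of Object.entries(graph)) {
    // Ignore self-imports and duplicate edges.
    const unique = Array.from(new Set(targets.filter((t) => t !== source)));
    ensure(source).efferent = unique.length;
    for (const target of unique) {
      ensure(target).afferent += 1;
    }
  }

  for (const metric of Object.values(result)) {
    const total = metric.afferent + metric.efferent;
    metric.instability = total === 0 ? 0 : metric.efferent / total;
  }
  return result;
}

// Illustrative graph only, not E-Corp's real dependencies.
const exampleGraph: ImportGraph = {
  views: ['store', 'services', 'shared'],
  store: ['api', 'shared'],
  services: ['api', 'shared'],
  api: ['shared'],
  shared: [],
};
const metrics = couplingMetrics(exampleGraph);
```

In this made-up graph, `shared` is imported by everything and imports nothing, so it comes out as fully stable, while `views` sits at the other extreme. As with every metric, the numbers only become meaningful once they are read against the project's context.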

With the basics done, we are ready to dive into architectural pattern detection. As I go down the rabbit hole, I start by identifying how the modules, and the code in general, are organised. For that purpose I use Detective (covered in a separate blog post). Since we are running it locally, the following should do the job:

npm i @softarc/detective -D

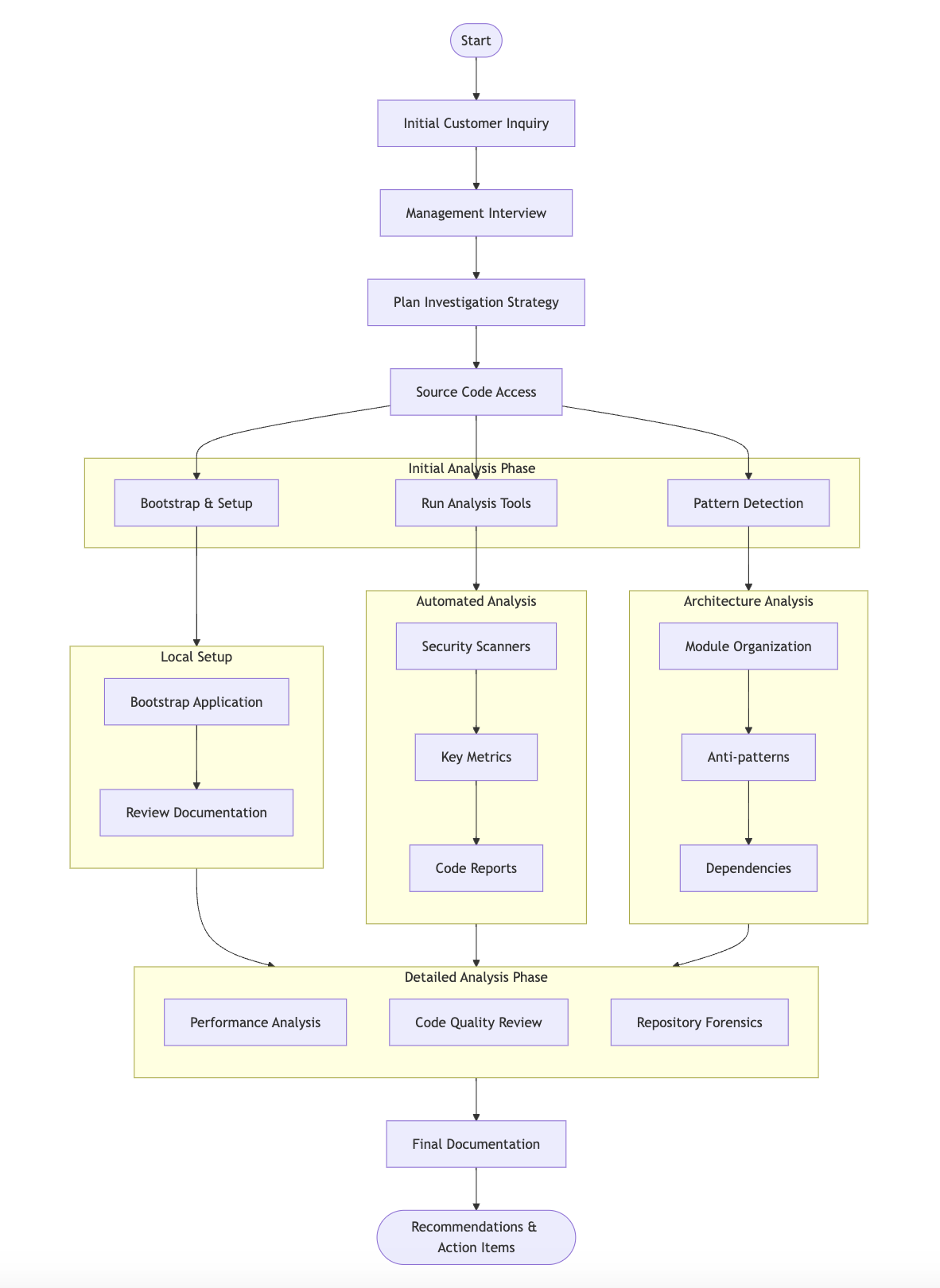

npx detectiveThe sidebar displays the current folder structure of the project, E-Corps frontend team has created some top level folders under the src folder:

    src
    |- api
    |- shared
    |- services
    |- store
    |- views

After seeing this structure, I thought "Okay, this looks like a technical folder organization with a layered approach." To verify my assumption, I used Detective.

After selecting these folders, Detective generated a helpful graph (based on the TypeScript imports) that revealed a coarse dependency mapping. The graph showed that while the architecture had some downsides, there were clear opportunities for improvement.

The analysis reveals several architectural anti-patterns, including circular dependencies and layer violations. They lead to the following concerns:

- Modularity Concerns

  - Circular dependencies and unclear module boundaries blur the separation between components

- Risk Factor: Interconnectedness

  - Changes to the codebase can lead to unintended side effects

  - Tight coupling makes maintenance and testing more complex

- Development Challenges

  - Reduced code predictability

  - Increased difficulty in isolating and testing components

  - Higher risk when making changes to shared dependencies
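A circular dependency of the kind described above can be found with a plain depth-first search over the import graph. The following is a rough sketch under assumptions: the graph is hand-written and hypothetical, and `findCycle` is an illustrative helper, not part of Detective or any other tool mentioned here.

```typescript
type DependencyGraph = Record<string, string[]>;

// Returns the first cycle found as a path (start node repeated at the end),
// or null when the graph contains no cycle.
function findCycle(graph: DependencyGraph): string[] | null {
  const visiting = new Set<string>(); // nodes on the current DFS path
  const done = new Set<string>();     // nodes fully explored without a cycle
  const path: string[] = [];

  const visit = (node: string): string[] | null => {
    if (done.has(node)) return null;
    if (visiting.has(node)) {
      // Back edge: cut the current path at the first occurrence of node.
      return [...path.slice(path.indexOf(node)), node];
    }
    visiting.add(node);
    path.push(node);
    for (const next of graph[node] ?? []) {
      const found = visit(next);
      if (found) return found;
    }
    path.pop();
    visiting.delete(node);
    done.add(node);
    return null;
  };

  for (const node of Object.keys(graph)) {
    const found = visit(node);
    if (found) return found;
  }
  return null;
}

// Hypothetical graph in which api pushes into store while store calls api.
const cyclicGraph: DependencyGraph = {
  views: ['store', 'services'],
  store: ['api'],
  services: ['api'],
  api: ['store'],
};
const cycle = findCycle(cyclicGraph);
const noCycle = findCycle({ views: ['store'], store: ['api'], api: [] });
```

The sketch stops at the first cycle it finds, which is usually enough to start a conversation; listing every cycle would need a more elaborate algorithm.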

As reviewers, we see clear potential for improving the codebase.

To achieve this, we recommend implementing a layered approach and protecting the verticals using either the ESLint rule from Nx (@nx/enforce-module-boundaries) or Sheriff (@softarc/sheriff-core). Both tools can prevent architectural errors at commit time through pre-commit hooks with husky.
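To illustrate the idea behind such boundary rules, here is a small self-contained sketch. It deliberately does not use the Nx or Sheriff configuration API; it only shows the underlying check. The allow-list, the file paths, and the helper names are all assumptions chosen for this example.

```typescript
// Assumed layer rules: each top-level folder lists the folders it may import.
const allowedImports: Record<string, string[]> = {
  views: ['store', 'services', 'shared'],
  store: ['services', 'shared'],
  services: ['api', 'shared'],
  api: ['shared'],
  shared: [],
};

interface ImportEdge {
  from: string; // importing file
  to: string;   // imported file
}

// Maps a path like 'src/api/items.api.ts' to its top-level folder ('api').
function layerOf(filePath: string): string | undefined {
  const match = /^src\/([^/]+)\//.exec(filePath);
  return match?.[1];
}

// Returns every import that crosses a layer boundary in a forbidden direction.
function findLayerViolations(edges: ImportEdge[]): ImportEdge[] {
  return edges.filter(({ from, to }) => {
    const source = layerOf(from);
    const target = layerOf(to);
    if (!source || !target || source === target) return false;
    const allowed = allowedImports[source];
    // Folders without a rule are not checked in this sketch.
    return allowed !== undefined && !allowed.includes(target);
  });
}

// Hypothetical imports, two of which break the rules above.
const observedImports: ImportEdge[] = [
  { from: 'src/views/items.component.ts', to: 'src/store/items.store.ts' },
  { from: 'src/api/items.api.ts', to: 'src/store/items.store.ts' },
  { from: 'src/api/items.api.ts', to: 'src/services/auth.service.ts' },
  { from: 'src/services/items.service.ts', to: 'src/api/items.api.ts' },
];
const violations = findLayerViolations(observedImports);
```

The real tools express the same allow-list through tags and dependency rules and report violations as lint errors, which is what makes them usable in a pre-commit hook.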

*If you're interested in learning more about protecting enterprise architecture, consider our training course Advanced Angular – Architektur Workshop.*

Next, I went into the API module and uncovered the following:

- The HTTP requests are completely hand-rolled

  - Here we should use a BFF (Backend For Frontend) and scaffold the client code from the YAML file

- There are no typings anywhere except ‘any’

- The API layer pushes directly into the global store

- The API layer sometimes calls services from the service layer
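As an illustration of what replacing ‘any’ could look like, the sketch below types a response and validates it at the boundary. The `Item` shape is borrowed from the mock response shown earlier; `isItem` and `parseItems` are hypothetical helpers, and in practice these types would more likely be generated from the OpenAPI definition than written by hand.

```typescript
// Assumed shape of one entry in the getItems response.
interface Item {
  id: number;
  name: string;
}

// Runtime type guard: narrows unknown JSON to Item without resorting to any.
function isItem(value: unknown): value is Item {
  if (typeof value !== 'object' || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return typeof candidate['id'] === 'number' && typeof candidate['name'] === 'string';
}

// Validates the whole response body and fails loudly on a contract mismatch.
function parseItems(body: unknown): Item[] {
  if (!Array.isArray(body) || !body.every(isItem)) {
    throw new Error('Response does not match the expected Item[] contract');
  }
  return body;
}

const parsed = parseItems(JSON.parse('[{"id": 1, "name": "CodeRabbit"}]'));
```

Keeping the API layer limited to typed functions like this, which return data instead of writing to the store or calling services, would also address the last two findings.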

These are all issues that can be fixed, but they should be planned as actionable items to be addressed either by the customer or as part of our consulting agreements.

E-Corp mentioned that the frontend team has 4-5 developers working on the codebase. As part of the review, we first examine the repository to assess the "bus factor" risk—the possibility that a single developer holds critical knowledge, which could impede feature delivery if that person leaves the team.

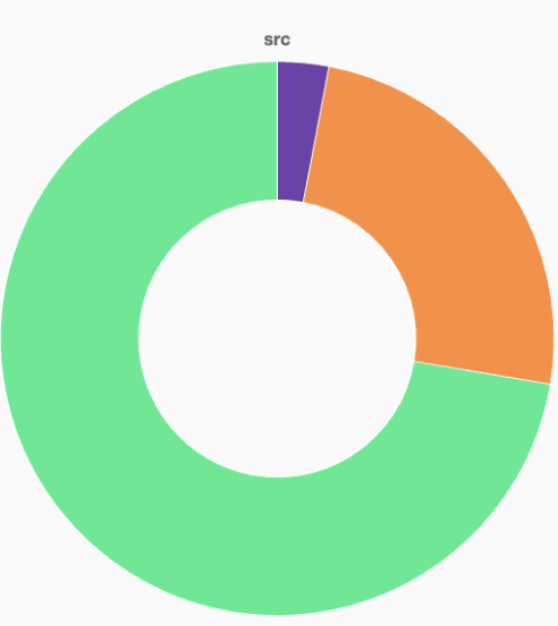

Using Detective's hotspot analysis, I selected the src folder to reveal a pie chart showing code contributions. After filtering to the last 12 months, our tool showed that only three people had contributed to the codebase, with one developer responsible for over 70% of the code.

Further analysis revealed that other developers worked primarily in non-critical areas—indicating a clear bus factor risk.
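The contribution figures behind such a chart can be approximated with very little code. The sketch below assumes the git history has already been reduced to author and changed-line counts (for example from `git log --numstat` output); the data, the names, and the 50% threshold are invented for illustration, and this is only one of several possible definitions of the bus factor.

```typescript
interface CommitRecord {
  author: string;
  linesChanged: number;
}

// Share of all changed lines per author, as a fraction between 0 and 1.
function contributionShare(commits: CommitRecord[]): Map<string, number> {
  const totals = new Map<string, number>();
  let overall = 0;
  for (const { author, linesChanged } of commits) {
    totals.set(author, (totals.get(author) ?? 0) + linesChanged);
    overall += linesChanged;
  }
  const shares = new Map<string, number>();
  totals.forEach((lines, author) => {
    shares.set(author, overall === 0 ? 0 : lines / overall);
  });
  return shares;
}

// Smallest number of authors who together own more than `threshold`
// of the changed lines.
function busFactor(shares: Map<string, number>, threshold = 0.5): number {
  const sorted = Array.from(shares.values()).sort((a, b) => b - a);
  let covered = 0;
  let count = 0;
  for (const share of sorted) {
    covered += share;
    count += 1;
    if (covered > threshold) break;
  }
  return count;
}

// Invented history for the last 12 months.
const recentCommits: CommitRecord[] = [
  { author: 'alice', linesChanged: 500 },
  { author: 'bob', linesChanged: 180 },
  { author: 'alice', linesChanged: 220 },
  { author: 'carol', linesChanged: 100 },
];
const shares = contributionShare(recentCommits);
const factor = busFactor(shares);
```

Line counts are a crude proxy for knowledge, which is why the numbers should be cross-checked against which areas of the codebase each person actually works in.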

Git analysis also revealed a strong change coupling issue. Components that were frequently modified together in the same commits suggested an architectural misalignment. In the resulting graph, the thickness of the connecting lines indicates the strength of coupling between modules.
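Change coupling boils down to counting how often two modules show up in the same commit. Here is a minimal sketch, assuming each commit has already been reduced to the list of top-level modules it touched; the history and the function name `changeCoupling` are made up for this example.

```typescript
// Each commit is represented by the modules it touched.
type CommitModules = string[];

// Counts how often each unordered pair of modules changed in the same commit.
function changeCoupling(commits: CommitModules[]): Map<string, number> {
  const pairs = new Map<string, number>();
  for (const modules of commits) {
    // Deduplicate and sort so that (api, store) and (store, api) share a key.
    const unique = Array.from(new Set(modules)).sort();
    for (let i = 0; i < unique.length; i++) {
      for (let j = i + 1; j < unique.length; j++) {
        const key = `${unique[i]}<->${unique[j]}`;
        pairs.set(key, (pairs.get(key) ?? 0) + 1);
      }
    }
  }
  return pairs;
}

// Invented commit history.
const history: CommitModules[] = [
  ['api', 'store'],
  ['api', 'store', 'views'],
  ['services'],
  ['store', 'api'],
];
const coupling = changeCoupling(history);
```

The resulting counts correspond to the line thickness in a coupling graph: the higher the count for a pair, the more often a change in one module forced a change in the other.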

The commit pattern analysis revealed a lack of meaningful commit messages, with many simply stating "fix" or "update". This is particularly problematic for E-Corp because there's no connection between tickets and git commits. Additionally, such a vague git history makes it difficult to use git bisect effectively when searching for bugs introduced during development.

From here on, creating the documentation requires extensive analysis. I examine each module in detail to identify patterns within the codebase.

My detailed review checklist includes:

- Performance archaeology

  - API usage patterns

  - State management assessment

- Code quality forensics

  - Component coupling analysis

  - Design system evaluation

- TypeScript implementation analysis

  - Type system utilization

  - Generic patterns

  - Common type pitfalls

- Repository forensics

  - Commit patterns analysis

  - Branch strategy evaluation

  - Code ownership patterns

  - Code review practices

I hope this brief example has provided insight into our work beyond training. If you'd like to learn how E-Corp's story continues, consider booking us for an architecture review.

Request Your Review Now!

If you are interested in a review to get feedback and recommendations for improving your application, feel free to send us a request.

Linked Resources

Here's an appendix of the linked resources mentioned in the document:

Performance & Testing Tools

- Lighthouse Performance Scoring - https://developer.chrome.com/docs/lighthouse/performance/performance-scoring/

API & Backend Tools

- Mockoon - https://mockoon.com/

- OpenAPI Backend - https://openapistack.co/

Code Analysis & Security Tools

- JetBrains Qodana - https://www.jetbrains.com/qodana/

- detect-secrets - https://github.com/Yelp/detect-secrets

- Snyk - https://snyk.io/code-checker/typescript/

- Semgrep - https://github.com/semgrep/semgrep

- Horusec - https://github.com/ZupIT/horusec

Architecture & Development Tools

- Detective - https://github.com/angular-architects/detective

- Nx Module Boundaries - https://nx.dev/features/enforce-module-boundaries

- Softarc Sheriff - https://sheriff.softarc.io/

Documentation & Research

- McCabe Cyclomatic Complexity Paper - http://mccabe.com/pdf/mccabe-nist235r.pdf

Training Resources

- Advanced Angular Architecture Workshop - https://www.angulararchitects.io/angular-schulungen/